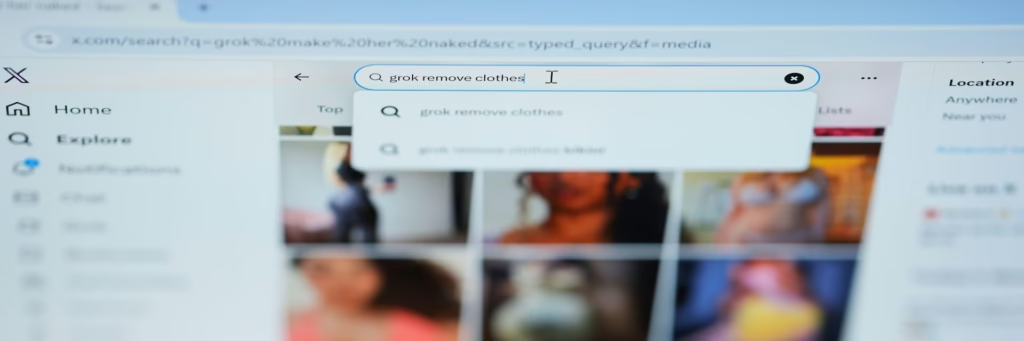

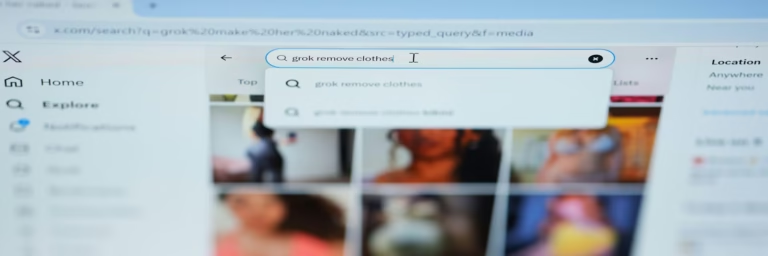

Grok, an AI chatbot created by Elon Musk’s company xAI, has triggered a global backlash. The controversy began after the tool was used to create sexualised and nude images of real people, including children, without their consent.

This has pushed governments, regulators, and technology experts to ask urgent questions about how artificial intelligence should be controlled. But an even bigger issue has come into focus: can technology also be used to stop the growing problem of AI-generated deepfake images before they spread?

Why countries are blocking Grok

On January 10, Indonesia became the first country to temporarily block access to Grok. Malaysia followed soon after. Other governments, including the UK, said they would take action against both Grok and the social media platform X (formerly Twitter), where many of the images were shared.

These bans are meant to protect users and send a strong message to AI companies. However, in practice, they have limits.

People can easily bypass country bans by using tools like virtual private networks, or VPNs. A VPN hides a user’s real location and makes it look like they are accessing the service from another country where it is still allowed.

Because of this, national bans often reduce visibility rather than completely stopping access. They put pressure on companies like xAI, but they do not fully prevent misuse. Images created in one country can still spread across borders through encrypted messaging apps, social media platforms, and even the dark web.

How X and Grok responded

After public criticism increased, X placed Grok’s image-generation feature behind a paywall, meaning only paying users could access it. The company said it takes action against illegal content, including child sexual abuse material, by removing posts, permanently suspending accounts, and working with law enforcement when needed.

Grok itself issued an apology, calling what happened a “serious lapse.”

How AI image generation works, in simple terms

Not all chatbots can create images, but most major AI companies now offer this feature, including OpenAI, xAI, Meta, and Google.

These systems are usually built using something called diffusion models. In simple terms, the AI is trained by taking real images and slowly adding visual “noise” until the original image is no longer clear. The AI then learns how to reverse this process and rebuild the image step by step.
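The noise-adding half of that process can be sketched in a few lines of Python. This is a toy illustration only, not any company's actual system: the "image" is a short list of pixel values, and the function names and the noise schedule values (`beta_start`, `beta_end`) are assumptions chosen for the example. The learned reverse step, which needs a trained neural network, is not shown.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance for each step, rising linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def forward_noise(pixels, betas, rng):
    """Apply every noising step in turn and return the final noisy pixels."""
    x = list(pixels)
    for beta in betas:
        keep = math.sqrt(1.0 - beta)   # how much of the current image survives
        spread = math.sqrt(beta)       # how much fresh Gaussian noise is mixed in
        x = [keep * v + spread * rng.gauss(0.0, 1.0) for v in x]
    return x

def signal_fraction(betas):
    """Fraction of the original pixel amplitude left after all steps."""
    remaining = 1.0
    for beta in betas:
        remaining *= math.sqrt(1.0 - beta)
    return remaining
```

Calling `forward_noise([0.2, 0.8, 0.5, 0.1], linear_beta_schedule(1000), random.Random(0))` returns four values that are almost entirely noise, and `signal_fraction` shows why: with this assumed schedule, well under 1% of the original signal survives the full run. Training teaches a model to undo these steps one at a time.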

Over time, the system learns patterns related to faces, bodies, clothes, lighting, and shapes. Similar concepts end up close together in the model’s internal representation. For example, clothed and unclothed human bodies are very similar in shape, so the AI only needs small changes to move from one to the other.

If a real photo is used as a starting point and the person’s identity is preserved, turning a clothed image into a nude one becomes technically easy. The AI does not understand consent, identity, or harm. It simply responds to prompts based on what it has learned.

Why filters don’t fully solve the problem

After an AI model is trained, companies add safety rules and filters on top. This process is known as retrospective alignment. These rules are meant to block harmful or illegal content and guide the AI to behave responsibly.

However, these controls do not remove the AI’s ability to create such images. They only restrict what it is allowed to show. These limits are mainly business and policy decisions made by the company, sometimes influenced by government regulations.
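The idea of a filter sitting on top of an unchanged model can be illustrated with a minimal Python sketch. Everything here is hypothetical: `guarded_generate`, `fake_model`, and the `BLOCKED_TERMS` list are invented for the example, and real moderation systems rely on trained classifiers and human review rather than a word list.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical policy list; far too crude for real use.
BLOCKED_TERMS = {"nude", "undress"}

@dataclass
class Result:
    allowed: bool
    image: Optional[str]
    reason: str

def guarded_generate(prompt: str, generate: Callable[[str], str]) -> Result:
    """Check the prompt against the policy before calling the model."""
    words = set(prompt.lower().split())
    if words & BLOCKED_TERMS:
        # The model is never called, but its abilities are unchanged.
        return Result(False, None, "blocked by policy filter")
    return Result(True, generate(prompt), "ok")

def fake_model(prompt: str) -> str:
    """Stand-in for an image generator; returns a placeholder string."""
    return f"<image for: {prompt}>"
```

In this sketch, `guarded_generate("a nude portrait", fake_model)` is refused, while `guarded_generate("a mountain at sunrise", fake_model)` passes through to the model. The filter decides what is returned; it does nothing to what `fake_model` itself could produce if called directly.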

Social media platforms could also help by blocking sexual images of real people and requiring clear consent. While they have the technical ability to do this, many large tech companies have been slow to invest in the heavy moderation work required.

What is “jailbreaking” and why it matters

Research by Nana Nwachukwu from Trinity College Dublin found that Grok received frequent requests for sexualised images. Before Grok was placed behind a paywall, estimates suggest up to 6,700 nude images were being generated every hour.

This drew attention from regulators. French officials called some of the content clearly illegal and referred cases to prosecutors. The UK’s regulator, Ofcom, also launched an investigation into X and xAI.

The issue is not limited to Grok. In early 2024, AI-generated sexual images of Taylor Swift, created without consent using public tools, spread widely online before being taken down due to legal and public pressure.

Some platforms openly advertise that they have few or no content restrictions. Many use open-source AI models with minimal safeguards. In addition, there are self-hosted tools that allow users to remove all protections entirely.

Experts estimate that tens of millions of AI-generated images are created every day, with AI video generation growing even faster.

Another concern is that some AI models, such as Meta’s Llama and Google’s Gemma, can be downloaded and run on personal computers. Once offline, these systems operate without any oversight or moderation.

Even platforms with strict rules can be bypassed through a method called jailbreaking. This involves writing prompts in clever ways to trick the AI into breaking its own safety rules.

Instead of directly asking for banned content, users disguise requests as fictional stories, educational material, journalism, or hypothetical examples. One early example, known as the “grandma hack,” involved asking an AI to imagine a deceased grandmother describing her past technical work, which led the model to explain restricted activities in detail.

The real danger: speed and scale

The internet already contains huge amounts of illegal and non-consensual sexual content. What AI changes is how fast and how easily new material can be created.

Law enforcement agencies warn that AI could massively increase the volume of such content, making it nearly impossible to investigate or remove everything. Laws that work in one country often fail when platforms or servers are based elsewhere, a problem that has existed for years with illegal online content.

Once images spread online, tracking their source and removing them becomes slow and largely ineffective.

By making it easy to generate sexualised images using simple, everyday language, popular AI chatbots expose millions of users to this possibility. Grok alone is estimated to have between 35 million and 64 million monthly active users.

If companies can build systems that generate these images, they can also design systems to block them, at least in theory. In reality, the generation technology is already widely available and demand for it continues. That means this capability cannot be fully erased, only managed and limited.
